05 Feb 2025

Optimizing Resource Utilization for Interactive GPU Workloads with Transparent Container Checkpointing

Jupyter notebooks and AI chatbots are increasingly popular in scientific research and data analysis. Allocating expensive GPU resources to them is challenging because their usage patterns are unpredictable. A team of researchers presented a novel solution to this challenge at FOSDEM 2025.

FOSDEM 2025 took place in Brussels, Belgium

Oxford e-Research Centre DPhil student Radostin Stoyanov delivered a talk at FOSDEM 2025, alongside colleague Viktória Spišaková (PhD student at the Faculty of Informatics at Masaryk University, and IT architect at the CERIT-SC centre). Adrian Reber (Senior Principal Software Engineer at Red Hat) contributed to the research.

FOSDEM is a two-day event organised by volunteers to promote the widespread use of free and open-source software. FOSDEM 2025 took place at ULB Solbosch Campus in Brussels, Belgium. FOSDEM is recognised as one of the most prominent conferences of its type in Europe.

The talk was entitled “Optimizing Resource Utilization for Interactive GPU Workloads with Container Checkpointing”.

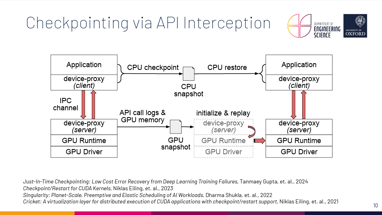

Interactive GPU workloads, such as Jupyter notebooks and generative AI inference, are becoming increasingly popular in scientific research and data analysis. However, efficiently allocating expensive GPU resources in multi-tenant environments like Kubernetes clusters is challenging due to the unpredictable usage patterns of these workloads. Container checkpointing was recently introduced as a beta feature in Kubernetes and has been extended to support GPU-accelerated applications.
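For readers unfamiliar with the Kubernetes feature, the kubelet exposes container checkpointing as a POST request to a path of the form `/checkpoint/{namespace}/{pod}/{container}`. The minimal Python sketch below only builds that URL. It is not code from the talk; the helper name and the pod and container names are hypothetical, and port 10250 is assumed as the kubelet's usual default.

```python
from urllib.parse import quote


def kubelet_checkpoint_url(node_host: str, namespace: str, pod: str,
                           container: str, port: int = 10250) -> str:
    """Build the kubelet checkpoint endpoint URL for a single container.

    Hypothetical helper for illustration only: it constructs the URL and
    does not send any request.
    """
    # Percent-encode each path segment so unusual names cannot break the path.
    segments = [quote(part, safe="") for part in (namespace, pod, container)]
    return f"https://{node_host}:{port}/checkpoint/" + "/".join(segments)


# Hypothetical pod and container names, chosen only for this example.
url = kubelet_checkpoint_url("localhost", "default", "jupyter-gpu", "notebook")
print(url)
```

Actually sending the request would additionally require kubelet client credentials and a container runtime with checkpoint support, neither of which is shown here.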

The talk presented a novel approach to optimizing resource utilization for interactive GPU workloads using container checkpointing. The approach enables dynamic reallocation of GPU resources based on real-time workload demands, without requiring changes to existing applications. The speakers evaluated the approach experimentally with a variety of interactive GPU workloads and presented preliminary results that highlight its potential.

Radostin Stoyanov commented on the work:

"Lowering the cost of AI training and inference by driving high resource utilization is crucial for cloud providers today. Our GPU checkpointing mechanism facilitates highly efficient and reliable execution of deep learning workloads by enabling dynamic and transparent preemption, migration, and elastic scaling."